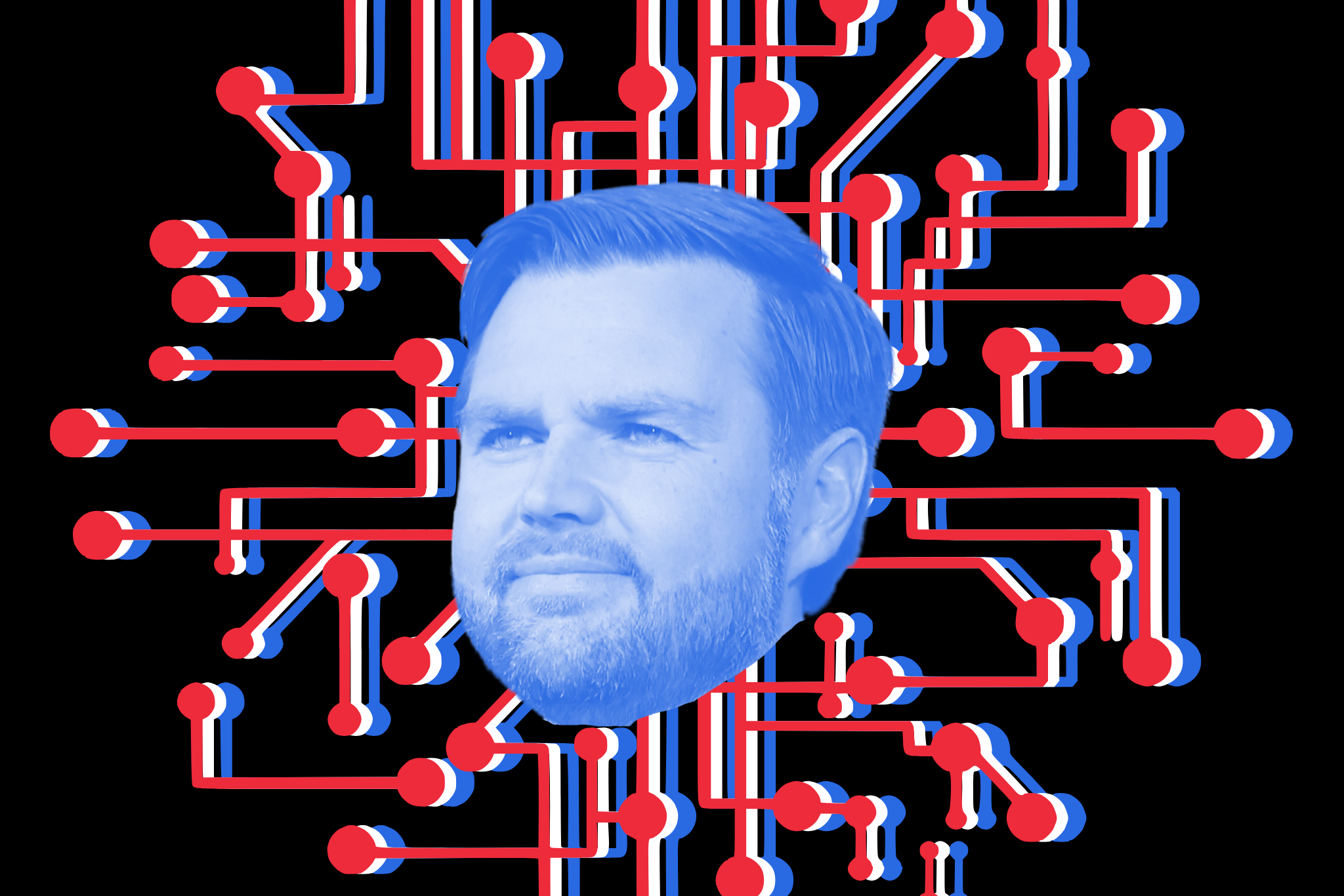

Vice President J.D. Vance sent a clear message to global leaders at the recent AI Action Summit in Paris: The United States is embracing a forward-thinking AI strategy, prioritizing innovation, market dominance, and economic leadership to ensure that it stays ahead of the pack.

The summit brought together major tech executives and world leaders such as French President Emmanuel Macron, Indian Prime Minister Narendra Modi, European Commission President Ursula von der Leyen, and UN Secretary-General António Guterres. They heard Vance deliver an unapologetic declaration: America will not allow itself to be outpaced, outregulated, or outmaneuvered in the AI revolution.

> America wants to partner with all of you, and we want to embark on the AI revolution before us with a spirit of openness and collaboration. But to create that kind of trust, we need international regulatory regimes that foster the creation of AI technology rather than strangle it. We need our European friends, in particular, to look to this new frontier with optimism rather than trepidation.

To drive home the point, the U.S. and the United Kingdom elected not to sign a global AI safety pledge endorsed by some 80 countries, choosing instead to signal their commitment to a more dynamic, industry-driven approach that fosters growth and competitiveness rather than excessive regulatory constraints.

Vance’s remarks Tuesday marked a significant departure from the Biden administration’s more risk-averse AI policies. The Trump administration is demonstrating confidence in American ingenuity, positioning AI as a national asset that must be cultivated, not hindered. But what does this change actually mean for U.S. companies operating in the AI sector? What new opportunities and challenges will they face?

A break from regulation-first policies.

Under the Biden administration, concerns about ethical risks, misinformation, and systemic bias largely shaped AI policy. Executive orders emphasized guidelines for responsible AI use, sought to establish safety and fairness benchmarks, and encouraged oversight of large AI models. Many companies faced increasing compliance costs as regulators pushed for greater transparency, risk assessments, and accountability mechanisms.

The Trump administration has already taken steps to dismantle this framework; on his first day in office, President Donald Trump revoked Biden's 2023 executive order on AI. Rather than focusing on mitigating risks, the administration is prioritizing AI as a strategic driver of U.S. economic and technological leadership. Companies can expect a rollback of restrictive regulations, fewer constraints on AI model deployment, and a policy environment that encourages rapid innovation and commercialization.

Some changes U.S. AI companies can expect.

- Faster development cycles: With fewer compliance hurdles, AI firms will likely be able to bring new products to market more quickly. Under President Joe Biden's executive order, the National Institute of Standards and Technology developed several frameworks to promote the responsible development and deployment of AI technologies. Combined with guidance from the U.S. AI Safety Institute, these frameworks would have imposed onerous compliance burdens on AI developers. Under the Trump administration, expect less regulatory red tape around data sourcing, model training, and deployment.

- Increased government support: The administration is expected to funnel more funding into AI research, infrastructure, and public-private partnerships. This includes backing major initiatives like the new Stargate project led by OpenAI, Oracle, and SoftBank, which aims to develop next-generation AI infrastructure and computing capabilities. It could also mean investing in next-generation computing architectures, such as quantum-enhanced AI, to ensure that American companies maintain a decisive technological edge. Companies working on defense, energy, and critical infrastructure AI applications could see a surge in government contracts.

- Empowering innovation over bureaucracy: Under the Biden administration, regulators made increasing efforts to ensure AI models were ethically aligned and free from systemic biases. These efforts often added layers of bureaucratic approval that tech firms feared would slow AI deployment and limit experimentation. The new administration seems to be shifting toward a more open, innovation-driven framework, reducing compliance burdens and encouraging AI firms to push boundaries in areas such as generative AI, autonomous systems, and real-time data analytics. With fewer barriers to research and application, U.S. companies are likely to have more flexibility to scale their AI models and integrate them into industries ranging from health care to national defense.

- Stronger stance against foreign competitors: Vance made it clear that the U.S. will not tolerate foreign governments limiting American AI dominance. This suggests greater federal action to protect U.S. tech firms from European and Chinese regulatory pressures, along with a more aggressive posture in restricting Chinese AI firms from accessing U.S. technology.

- Energy and infrastructure investment: AI development is resource-intensive, requiring significant computational power. The Trump administration's pro-energy policies and domestic chip manufacturing push could help AI firms secure the resources they need to scale, helping the U.S. remain self-sufficient in AI development.

What will stay the same?

Despite the shift in rhetoric, some key elements of AI policy are unlikely to change:

- Export controls on AI chips: The U.S. has already implemented restrictions on advanced semiconductor exports to China, and this policy is expected to continue. These controls, although imperfect, are designed to prevent China from gaining access to high-performance chips that could be used for military or surveillance applications, ensuring that cutting-edge AI technology remains a strategic advantage for the U.S. Additionally, the government is likely to continue tightening restrictions on licensing agreements and partnerships that might indirectly benefit Chinese AI firms. AI firms selling hardware abroad will still face limitations.

- National security concerns: While the administration is promoting AI acceleration, restrictions on foreign investment in sensitive AI projects and scrutiny of AI’s role in cybersecurity will remain priorities.

- State-level regulations: Even if federal oversight loosens, individual states are continuing to push for AI accountability. California, for example, has taken steps toward AI regulation, and this trend could persist regardless of federal policy shifts.

Implications for business strategy.

For U.S. AI firms, the new policy direction presents both opportunities and challenges. Companies that have been constrained by regulatory concerns may now have more freedom to innovate and commercialize their models, positioning the U.S. as a global leader in AI-driven economic expansion.

The challenge for businesses will be navigating global AI markets. While the U.S. is prioritizing acceleration, the European Union remains committed to strict AI oversight, meaning companies operating internationally will still need to contend with divergent regulatory environments.

Ultimately, the message from the AI Action Summit is clear: the U.S. government sees AI as a critical tool for economic and geopolitical advantage, and it is prepared to take a proactive, business-friendly approach to ensure its dominance. For companies, this means a faster, more aggressive AI race, one with substantial rewards for success and the full commitment and backing of the government.

Please note that we at The Dispatch hold ourselves, our work, and our commenters to a higher standard than other places on the internet. We welcome comments that foster genuine debate or discussion—including comments critical of us or our work—but responses that include ad hominem attacks on fellow Dispatch members or are intended to stoke fear and anger may be moderated.