Many parents today are rightly concerned about what their kids see on social media and how much time they spend online. And politicians have noticed. In fact, there are so many parents with those concerns that the political juice was worth the squeeze for members of the Senate Judiciary Committee to drag tech executives to a hearing on Wednesday for questioning. Or, more accurately, to be berated by members in diatribes loosely styled as questions—questions which the executives then weren’t allowed to answer before being cut off by the next harangue.

It was good political theater, especially in an election year. But the spectacle did little to ease the understandable anxieties parents have about their kids’ online lives.

Officially, Wednesday’s hearing was focused on online child sexual exploitation, which everyone agrees is intolerable and demands an urgent response. Law enforcement needs increased resources and new strategies for dealing with this kind of criminal behavior. It’s also important for parents to hear about the worst-case scenarios, especially given that platforms with billions of users will never be able to eliminate all risk. Since parents will remain the first line of defense, the victims who participated in the hearing did a great service to all families.

But the broader issue of social media’s effect on kids’ mental health is separate from criminal acts. That’s the source of much legislation at the federal and state levels, and unsurprisingly where most political points are scored. And yet, while focusing on alleged ties between social media and negative mental health outcomes may work with voters and sound appealing to worried parents, there is no legislative panacea. Most current proposals addressing the technologies—including parental permission laws passed in Arkansas and Utah and proposed in many other states—are likely unconstitutional, and they also come with privacy trade-offs most Americans won’t tolerate. That doesn’t mean there isn’t a problem—it just means that not every problem has a policy solution.

With apologies to my fellow exhausted parents, this one’s on us. As with healthy eating, physical safety from “stranger danger,” binge drinking, and unwanted pregnancies, the dangers of children’s digital lives are best patrolled by parents rather than the government. We will do a better job, if you can believe it, than the politicians who craft one-size-fits-all laws, well-intentioned as they may be. That’s because every kid is different and ready for things at a different age. So, families need to have varying thresholds for acceptable content and time spent online.

Advocates of government intervention point to kids’ social media regulations as an issue that (finally!) both the political left and right can agree on. But widespread agreement on a problem shouldn’t mean additional regulation is necessarily the solution. More productively, the consensus that there’s an issue points to an enticing opportunity for already established companies and entrepreneurs. A marketplace properly imbued with a healthy profit motive won’t ignore a need like that for long.

Thankfully, that process has already begun with parental control tools available at every level of the tech stack. Social media platforms offer family pairing and daily screen time limits that parents can control. Internet service providers give parents the option of blocking age-inappropriate content and turning off connectivity at certain hours. There’s even an app that will tell you everything your child is doing on their device: it tracks their location, manages their screen time, and blocks unwanted content. Let’s see the cable or broadcast television of our childhoods do that.

Parental controls on social media apps, gaming consoles, web browsers, etc., all exist not because we all have to agree on what content kids should access but because we don’t have to agree. Those tools exist as market solutions to parents’ desire to make those decisions. But regulation often blocks market solutions from developing, and laws banning kids from social media would certainly smother the innovation and improvement of parental tools already in progress.

Children will grow up and find themselves in need of digital skills for work, further education, and socialization. It’s better, therefore, to guide them through using social media productively than to deny it to them until a late age. We all know the sheltered friend from college who went wild with alcohol, socializing, or junk food freshman year. Having been completely denied these things growing up, they developed no knowledge of how to enjoy them responsibly and moderate their behavior. It may be easier for parents in the short term to have lawmakers just ban social media for minors, but it doesn’t serve the long-term interests of our children.

Parents must work to produce good digital citizens. Like so many other parental charges, it’s not easy, but it’s one of many responsibilities that come with the great privilege of having children. Responsibly using social media is now another arena of life, like manners, sleep, and hygiene, to be mastered with parental guidance. The internet isn’t going away, so we’d best equip our children to extract its maximum benefit and avoid its pitfalls.

Americans, increasingly interested in school choice and influencing curricula at the local level, seem to understand that outsourcing education entirely to governments isn’t ideal. There’s no evidence to suggest that ceding parental authority would be any more successful online—but there is plenty of proof of what would be sacrificed in the attempt.

Banning kids below a certain age from social media would necessitate some age-verification mechanism—and not just for children, but everyone using the internet. In practice, that proof would likely be a government I.D. or biometric face scanning. Platforms or third-party verification services would collect and store a great deal more of Americans’ personal information. These privacy and security implications run counter to citizens’ oft-expressed interest in more privacy. A 2023 Pew survey found that 81 percent of Americans felt that companies will use their data in ways with which they are not comfortable, particularly as the use of artificial intelligence becomes more widespread.

Age verification laws currently in circulation would also mean the end of anonymous speech online. While “trolls” can be irritating, no doubt, anonymous speech has played a critical role in our country. The framers who advocated the ratification of our Constitution signed the Federalist Papers as “Publius,” not as Alexander Hamilton, John Jay, and James Madison. Since then, the Supreme Court has many times affirmed that anonymous speech is protected by the First Amendment. Those protections are as critical in the era of cancel culture as they ever have been. It is a hallmark of a free society that the most unpopular and controversial speech is protected, guarding minority opinions against the tyranny of the majority.

First Amendment protections are already proving problematic for efforts by states around the country to restrict kids online. Courts have halted laws in California, Arkansas, and Ohio, finding that the measures are unlikely to survive free speech scrutiny. Utah officials are so convinced their law won’t be found constitutional that they stopped its implementation themselves. Federal proposals discussed at this week’s Senate hearing, like the EARN IT Act and the Kids Online Safety Act, are riddled with their own constitutional problems.

Perhaps Americans are prepared to sacrifice parental authority, privacy, and anonymous speech so that kids can have a safer space online. But we should be very confident that social media is the driver of increased youth depression rates before taking these steps.

The problem is, we’re not.

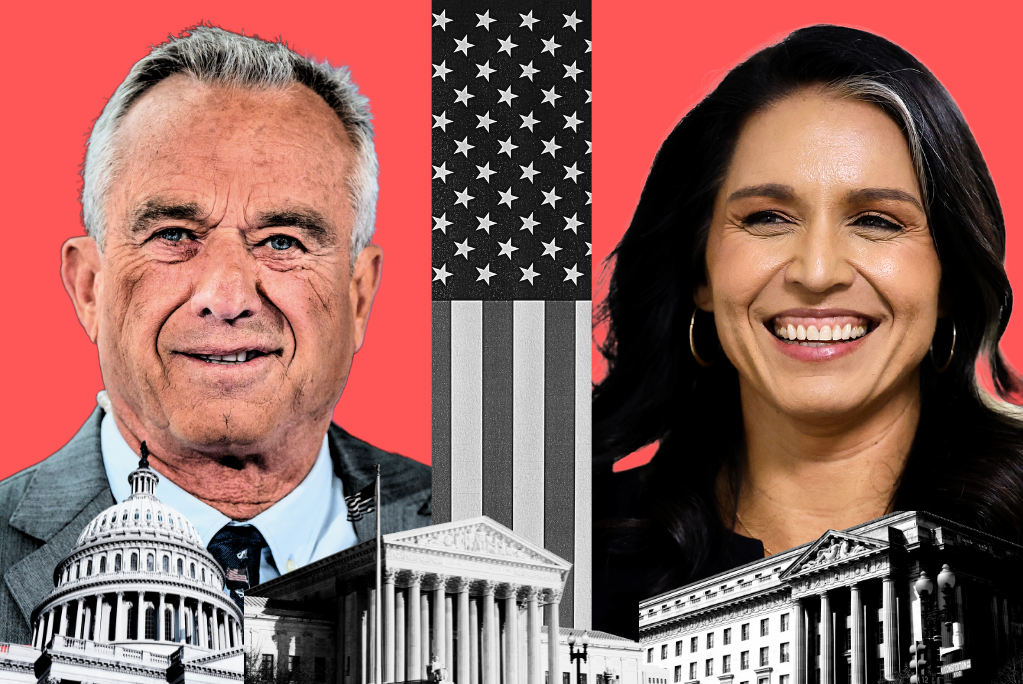

Opposing Debate

Studies about youth mental health and social media suffer from the same flaws as now-debunked studies attempting to link video games and real-world violence from decades ago. Those studies were correlational, not causal, and involved suspect self-reporting from kids. Accordingly, the Supreme Court found the data insufficient to justify restrictions on free speech. Proposed social media laws relying on similarly structured studies will likely suffer the same fate of being declared unconstitutional.

The much-discussed internal study on youth mental health from Meta, raised again at the hearing by Missouri Republican Sen. Josh Hawley, has been said to make the question of harm to teenage girls from social media a “closed case” because it showed some negative impact. But in full context, the study’s findings are more nuanced. Meta examined 12 areas, including anxiety, eating, loneliness, and sadness. In 11 out of 12, teenage girls reported feeling better after interacting with Instagram. Only on the topic of body image did the results vary, but the study still found that a majority of girls who struggle with the other 11 issues felt better after using Instagram or were unaffected by it.

According to the American Psychological Association, “Using social media is not inherently beneficial or harmful to young people.” It’s true that rates of adolescent depression and anxiety have been on the rise in recent years, but we’ll probably never be able to quantify exactly what contributes to today’s adolescent malaise. It may be that closing schools for up to two years, isolating children from their routines and normal socializing, contributed. It may be that dire warnings about global warming as an existential threat aren’t putting kids in a particularly cheery mood. Perhaps the 12 percent decline in church attendance over the last 25 years has contributed to feelings of hopelessness and isolation. It’s difficult to isolate these factors, and others, from the possible effects of social media.

Just as there are no shortcuts in the hard work of legislating within the bounds of the Constitution, there are no shortcuts in the hard work of raising digitally savvy and responsible young adults. Luckily, parents know their kids’ individual needs best and are most motivated to help them stay safe online—even on top of figuring out what to make for dinner and all that laundry we have to do.
