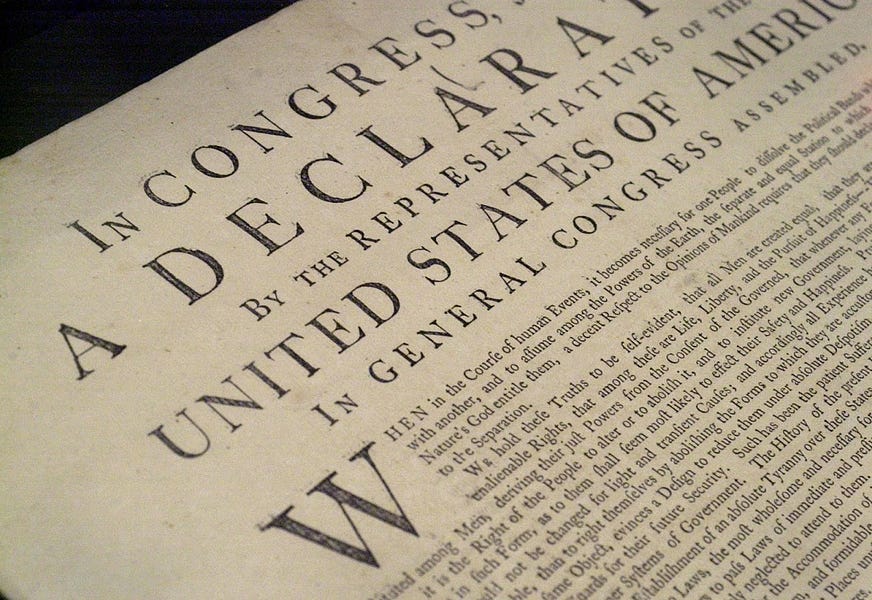

One question Sen. Ben Sasse posed to Judge Amy Coney Barrett last week might seem odd at first: What role does the Declaration of Independence play, he asked, when interpreting the Constitution? To anyone who remembers high school civics, the answer seems obvious: The Declaration is one of America’s founding documents; it sets forth the “self-evident truths” on which the nation was founded. Why would it not play a role in understanding the Constitution?

Yet the Declaration’s legal status is actually a subject of debate among lawyers, and Judge Barrett’s answer was a disappointing indication that, like her mentor Justice Antonin Scalia, she sees the Declaration not as the basis of American constitutional law (which it is) but as a merely political statement. “While the Declaration of Independence tells us a lot about history and the roots of our republic,” Barrett said, “it is not binding law.”

That’s not true—and it matters, not just to constitutional law nerds, but to how we understand and implement the most important aspects of our legal system.

To begin, the Declaration is quite clearly “law.” Federal laws are published in the United States Statutes at Large, and are also usually—but not always—codified in the United States Code. This latter is the “U.S.C.” that you often see on legal documents, but the United States Code is actually not our most important legal publication. Instead, if there’s a conflict between how something appears in the U.S.C. and the Statutes at Large—which does sometimes happen—it’s the Statutes at Large that takes precedence. (The law itself says so: 1 U.S.C. § 112.)

What, then, appears on Page 1, Volume 1 of the Statutes at Large? The Declaration of Independence. (It also appears in the U.S.C., on page xlv of Volume 1.)

If that’s not enough to make it law, what would be? Adoption by the legislature? But the Declaration was adopted by the Continental Congress, which was then the nation’s legislature—and nobody denies that other things the Continental Congress passed were “laws.” The Declaration clearly had legal consequences: It proclaimed that the United States was a separate sovereign, with power to “make war, conclude peace, contract alliances,” and so forth, all of which the nation proceeded to do. Surely being adopted by a legislature and having recognized legal consequences makes the Declaration “law.”

Skeptics might answer that those who say the Declaration isn’t law mean that it doesn’t command anyone to do anything. But many laws don’t command people to do things. Laws regulating how wills are made, or how corporations are formed, are still laws, even though they don’t force anybody to do anything. Perhaps the skeptics mean that the Declaration doesn’t set forth rules and consequences—but that’s not really true. It specifies general rules (the “self-evident truths”), and declares that “whenever any form of government becomes destructive of these ends,” certain consequences—specifically, reform or revolution—should follow.

And anyway, not all laws enunciate rules and consequences. Many are “aspirational,” meaning that they declare public policy in broad terms, so that public officials can aim at accomplishing those purposes. For instance, 42 U.S.C. § 18351(a)—governing American participation in the International Space Station (ISS) program—declares: “It shall be the policy of the United States, in consultation with its international partners in the ISS program, to support full and complete utilization of the ISS through at least 2024.” If that’s a law, surely the Declaration is, too.

In fact, setting forth aspirations is one of law’s most essential roles. The Founding Fathers knew that, which is why they declared (in the words of the Virginia Declaration of Rights, which is also a law) that “free government” depends on a “frequent recurrence to fundamental principles.” Laws often enunciate “fundamental principles” to guide officials in exercising their duties.

The Constitution does this too. Nobody would deny that the Ninth and Tenth Amendments are laws—yet they don’t command people to act, or impose consequences for rule-breaking. Rather, they were written to tell future generations how to interpret the Constitution. The Ninth says that just because an individual right isn’t specifically mentioned in the Constitution doesn’t mean it lacks constitutional protection. And the Tenth says that if the Constitution gives the federal government no power to do something, that thing remains a matter for states to handle. Lawyers often speak of Supreme Court decisions “applying the Tenth Amendment,” when what they mean is that the court consulted the principle articulated in that amendment when deciding how to rule. But if that makes sense, it makes equal sense to speak of “applying” the Declaration of Independence as law when courts rely on its principles of liberty and equality.

And courts have often done that. As R.H. Helmholz observes in Natural Law in Court, American lawyers routinely invoked the Declaration’s principles in legal arguments throughout the 19th century—even in cases involving “mundane questions related to family law and the law of inheritance.” Justice Clarence Thomas often invokes the Declaration as a guidepost for interpreting the Constitution—for example in Grutter v. Bollinger, in which he concluded that racial discrimination by government is unconstitutional. State courts have also frequently used the Declaration to guide their decisions. In 2006, Washington Supreme Court Justice Richard Sanders argued that the principle of “no taxation without representation” was a component of the Declaration, and given that Washington state was required (as all states since 1864 have been) to ensure that its Constitution was consistent with the Declaration in order to be admitted to the union, the state Constitution should be viewed as barring unelected officials from imposing taxes. Texas Supreme Court Justice James Blacklock wrote last year that a parent’s right to care for her child is one of the inalienable rights referenced by the Declaration and, consequently, that the Texas Constitution protects the rights of “the autonomous nuclear family.”

Unsurprisingly, judges sometimes disagree over how the Declaration’s principles apply to specific cases. Alabama’s former Chief Justice Roy Moore thought its reference to “life” meant that the practice of abortion should be entirely outlawed. The Kansas Supreme Court, by contrast, relied on the Declaration last year in holding that abortion rights are protected by that state’s Constitution because the concept of “liberty” includes “the right of personal autonomy, which includes the ability to control one’s own body.” Some see disagreements like these as proof that the Declaration can’t really be law—but judges disagree all the time, and their disagreements are often beneficial, since they help focus legal arguments and democratic debates on what we mean by freedom or how we view the proper role of government. What exactly is “due process of law”? What does “reasonable” mean in the Fourth Amendment? In law, as in science, asking the right question is often half the job.

That’s the Declaration’s primary role: to set out the basic principles of American nationhood. These principles are not just good advice for guiding our political and legal institutions, but, like the provision of a corporate charter that specifies the objectives of that corporation, they impose genuine limits on the boundaries of legitimate authority.

This is why the Declaration played its most dramatic legal role in disputes involving slavery—such as the 1839 Amistad case, in which lawyer and former president John Quincy Adams, representing a group of Africans who violently rebelled against some slave traders, successfully invoked the Declaration to justify his clients’ killing those who tried to enslave them. The Declaration—or rather, its absence—played an even more pivotal role in Dred Scott, when Chief Justice Roger Taney was compelled to ignore its actual words in order to claim that America’s Founders never thought black people could be citizens—and therefore that they could not sue for their freedom.

The consequences of Taney’s perversion of the Declaration’s language speak for themselves. As Abraham Lincoln put it after the decision was released, “If that declaration is not the truth, let us get the Statute book, in which we find it, and tear it out! Who is so bold as to do it?”

Sadly, today’s lawyers—both liberal and conservative—are that bold. To most modern attorneys, judges, and law professors, the Declaration is merely a “political” document—material for patriotic speeches, perhaps, but not legally significant. The reason is that they have largely adopted the 20th-century philosophy of “positivism,” which rejects the Declaration’s natural law principles and holds that ideas about justice or morality are merely matters of subjective personal (or collective) taste. When a legislature outlaws murder, it does so not because murder violates someone’s rights, but because voters just happen to feel that murder is wrong—nothing more.

As the positivist Chief Justice William Rehnquist declared, “[if] a society adopts a constitution and incorporates in that constitution safeguards for individual liberty, these safeguards indeed do take on a generalized moral rightness or goodness … [not] because of any intrinsic worth nor because of any unique origins in someone’s idea of natural justice but instead simply because they have been incorporated in a constitution by the people.”

Of course, under that theory, any constitution whatsoever that “a society adopts,” including a Nazi or Soviet constitution, is equally “good.”

The pioneer of positivism in American law was Justice Oliver Wendell Holmes Jr., who put the point succinctly in 1905: Constitutions, he said, are “made for people of fundamentally differing views.” But this is entirely false: Constitutions are made for people who agree on fundamentals, but differ on the particulars. The role of the Declaration is to enunciate the shared fundamentals of our constitutional order—while leaving the particulars to be decided by the political process established in the Constitution.

Today, however, both progressives and many conservatives, including devoutly religious ones, embrace positivism—and its accompanying moral relativism—out of either an ignorance of how natural law works, or a fear that placing individual rights beyond the reach of government will invite “judicial activism.”

Progressivism sees rights essentially as permissions handed out by government. “Democracy” exists to satisfy the people’s desires by fashioning new “rights” (actually, entitlements) to meet demand. To limit a government’s power—by specifying that there are some lines even democracy may not cross—is therefore anathema.

Many on the right essentially agree. Justice Scalia, for example, feared that “activist” courts would use natural law to force judges’ political views on the people. That led Scalia to take refuge in an appeal to majoritarianism and relativism instead, arguing that “the whole theory of democracy … is that the majority rules.” That, he said, means “if the people, for example, want abortion, the state should permit abortion. If the people do not want it, the state should be able to prohibit it.” In other words, there are no truths, self-evident or otherwise: There’s just the will of the majority, and those few permissions the majority deigns to give the minority, calling them “rights.”

That appeal to “democracy,” however, actually commits the same fallacy that positivists accuse the Declaration’s authors of committing. After all, why is “the will of the majority” any better a badge of legitimacy than the Declaration’s truths? There are only two possible answers: Either there is no such thing as legitimacy at all—in which case all government is entirely arbitrary, and we can have no moral obligation to respect it—or there is some true philosophical proposition that gives it legitimacy. But if there are any true philosophical propositions, then there’s nothing offsides about the Declaration purporting to articulate its own true propositions.

This might all seem abstract, but law is an abstract enterprise. It’s about subordinating human action to the government of rules—and in the case of constitutional law, it’s about subordinating government itself to rules. Differentiating between good and bad rules, however, requires us to consider basic principles of justice—and the Declaration’s role is to specify those principles. Constitutional provisions are sometimes phrased in broad terms (“equal protection of the law,” “commerce among the several states,” “the general welfare”) but it’s a judge’s job to figure out what those terms mean and apply them in deciding cases, and the Declaration helps guide that effort. Ignoring that guide leaves government officials—including judges—incapable of ensuring that their efforts fall within the limits and purposes that give government itself its legitimacy. At a time when leaders of both parties are advocating enormously intrusive programs of government expansion and control—over freedoms of speech, privacy, private property, and so much more—nothing could be more important than the fact that our nation’s most basic law specifies the limits of government authority.

Timothy Sandefur is vice president for Litigation at the Goldwater Institute and author of The Conscience of the Constitution: The Declaration of Independence and the Right to Liberty.

Photo By Joey McLeister/Star Tribune via Getty Images.

Editor’s note: This piece has been updated.
